Generative Adversarial Imitation Learning (GAIL) [1] is a recent successful imitation learning architecture that exploits the adversarial training procedure introduced in Generative Adversarial Networks (GAN) [2]. Albeit successful at generating behaviours similar to those demonstrated to the agent, GAIL suffers from a high sample complexity in the number of interactions it has to carry out in the environment in order to achieve satisfactory performance. We dramatically shrink the amount of interactions with the environment necessary to learn well-behaved imitation policies, by up to several orders of magnitude. Our framework, operating in the model-free regime, exhibits a significant increase in sample-efficiency over previous methods by simultaneously a) learning a self-tuned adversarially-trained surrogate reward and b) leveraging an off-policy actor-critic architecture. We show that our approach is simple to implement and that the learned agents remain remarkably stable, as shown in our experiments that span a variety of continuous control tasks.

Video

Paper + Code

Lionel Blondé, Alexandros Kalousis.

Sample-Efficient Adversarial Imitation Learning via Generative Adversarial Nets

Open-source, available in TensorFlow, PyTorch.

SAM: Sample-Efficient Adversarial Mimic

We address the problem of an agent learning to act in an environment in order to reproduce the behaviour of an expert demonstrator. No direct supervision is provided to the agent (she is never directly told what the optimal action is) nor does she receives a reinforcement signal from the environment upon interaction. Instead, the agent is provided with a pool of trajectories.

The approach in this paper, named Sample-efficient Adversarial Mimic (SAM), adopts an off-policy TD learning paradigm. By storing past experiences and replaying them in an uncorrelated fashion, SAM displays significant gains in sample-efficiency. To solve the differentiability bottleneck of [1] caused by the stochasticity of its generator, we operate over deterministic policies. By relying on an off-policy actor-critic architecture and wielding deterministic policies, SAM builds on the Deep Deterministic Policy Gradients (DDPG) algorithm [4], in the context of Imitation Learning.

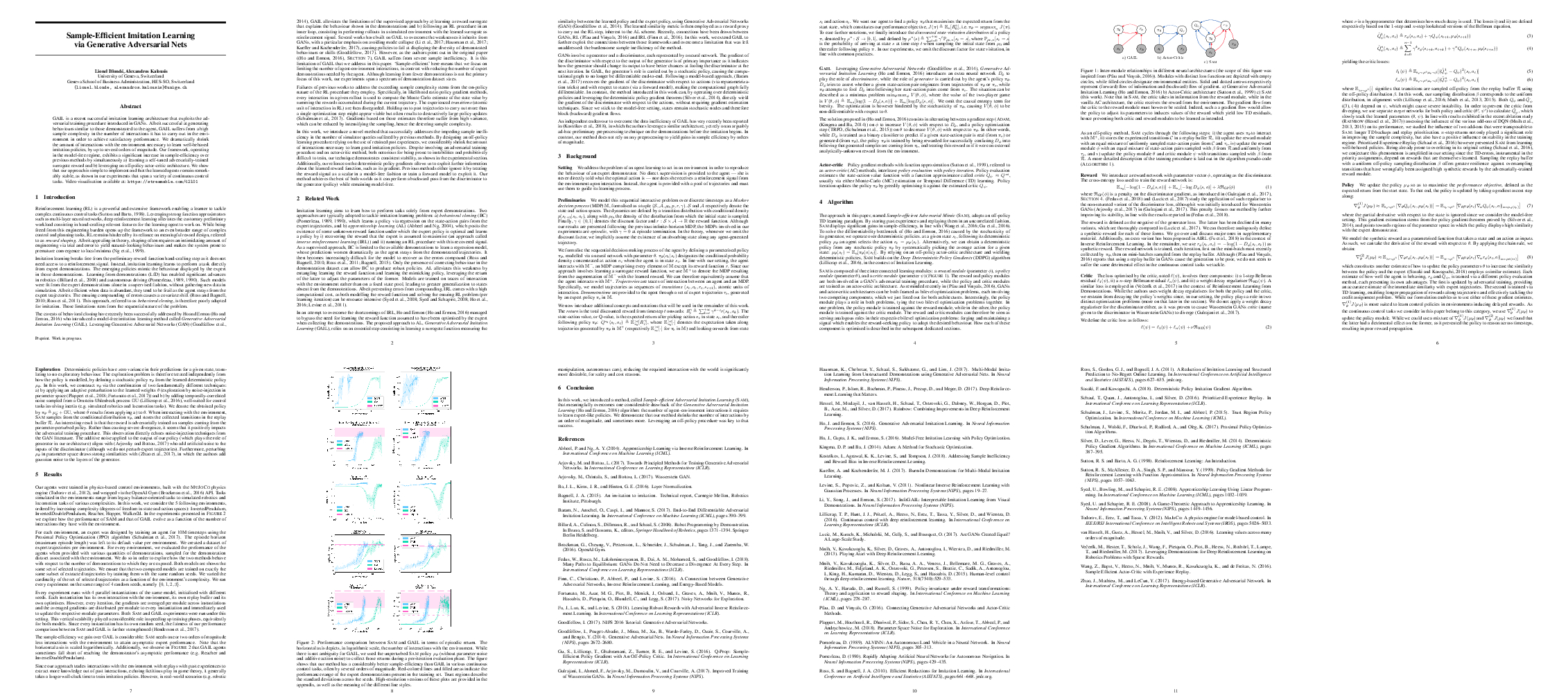

Inter-module relationships in different neural architectures (the scope of this figure was inspired from [3]). Modules with distinct loss functions are depicted with empty circles, while filled circles designate environmental entities. Solid and dotted arrows respectively represent (forward) flow of information and (backward) flow of gradient. a) Generative Adversarial Imitation Learning [1] b) Actor-Critic architecture [5] c) SAM (this work).

Sample-efficient Adversarial Mimic (SAM)

Results

Performance comparison between SAM and GAIL in terms of episodic return. The horizontal axis depicts, in logarithmic scale, the number of interactions with the environment.